Higher Vs Greater

Apache Parquet is a binary file format that stores data in a columnar fashion. Data inside a Parquet file is similar to an RDBMS-style table, where you have columns and rows. But ...

Import `pyarrow` as `pa` and `pyarrow.parquet` as `pq`. First, write the DataFrame `df` into a pyarrow Table, that is, convert the DataFrame to an Apache Arrow Table named `table`.

Delta and Parquet are indeed two different file formats. Delta format uses Parquet as its underlying storage format, so "Delta Parquet" means that data is stored using Delta ...

Parquet file: `data.parquet`, opened with `open(parquet_file, 'w+')`. Convert to Parquet: assuming one has a dataframe `parquet_df` that one wants to save to the Parquet file above, one can use ...

If your Parquet file was not created with row groups, the `read_row_group` method doesn't seem to work, because there is only one group. However, if your Parquet file is partitioned as a ...

Parquet is a columnar file format for data serialization. Reading a Parquet file requires decompressing and decoding its contents into some kind of in-memory data structure.

CData's Excel Parquet drivers are very expensive. Will have to make another plan to convert my data to CSV in Azure Data Storage for online retrieval. (DataWrangler1980)

The only downside of larger Parquet files is that it takes more memory to create them, so watch out in case you need to bump up the Spark executors' memory. Row groups are a way ...

I wonder if there is a consensus regarding the extension of Parquet files. I have seen a shorter `.pqt` extension, which has the typical 3 letters (like in csv, tsv, txt, etc.), and then ...

Greater Is He That Is In You than He That Is In The World

DataBlitz BE GREATER TOGETHER Join The Spider Men

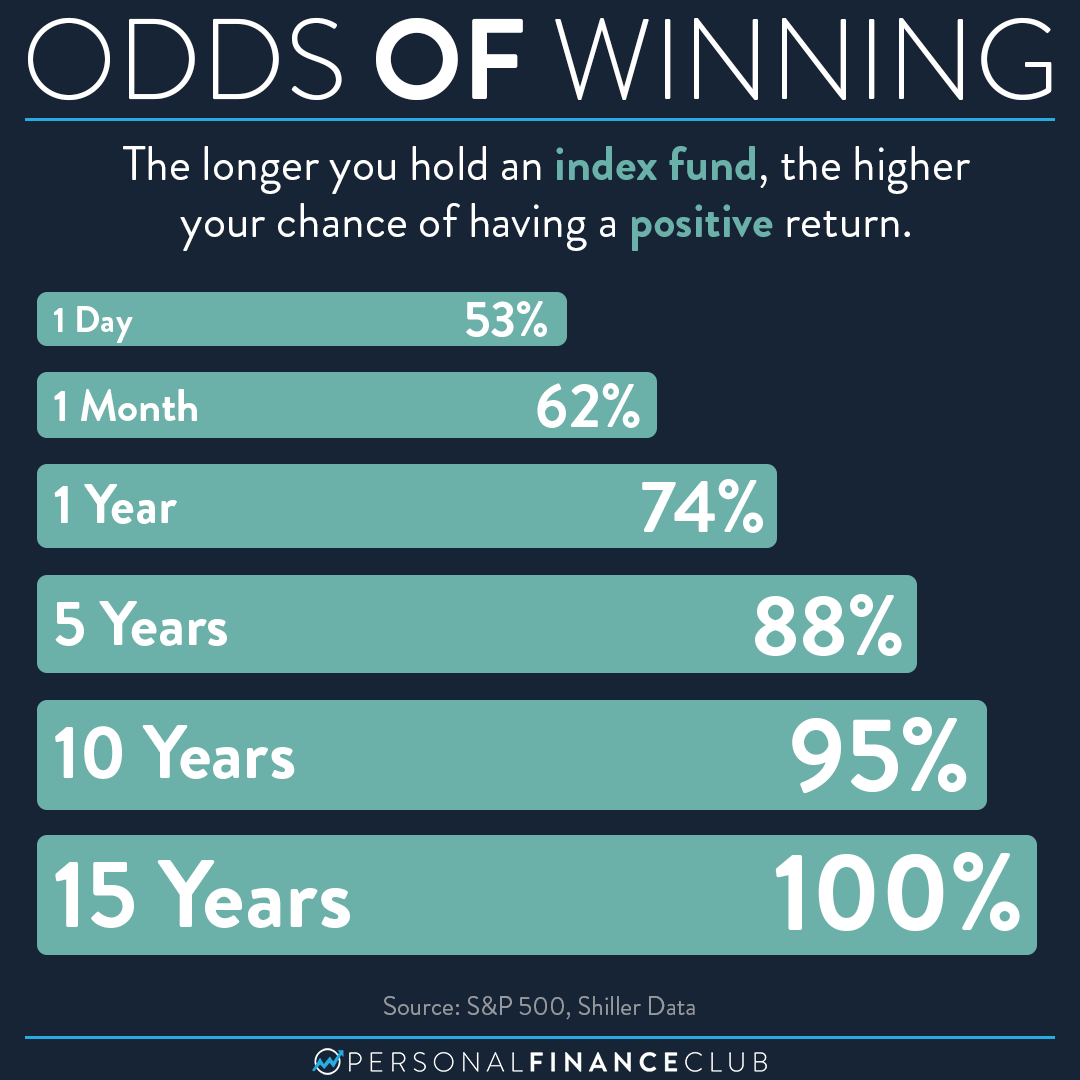

The Longer You Stay Invested The Higher Your Odds Of Success

Higher 3 16

Higher Logic Arlington VA

Higher Math Tutoring Birmingham AL

Higher Math Tutoring Birmingham AL

Greater jobs

Higher Ground Vocals

The Greater Review

Higher Vs Greater - Cdata s Excel Parquet Drivers are very expensive Will have to make another plan to convert my data to csv in Azure Data Storage for online retrieval DataWrangler1980