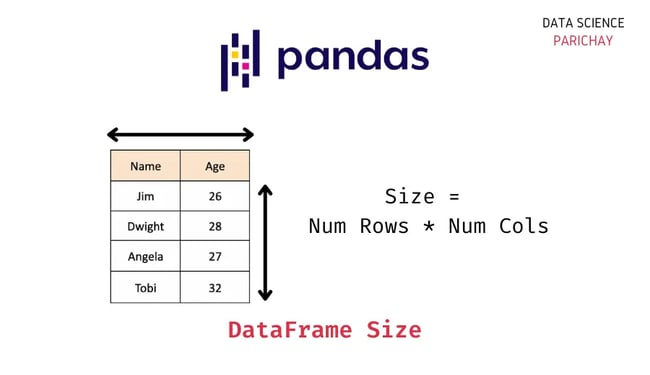

Size Of Dataframe

`s.size` and `len(s.index)` are about the same in terms of speed, but I recommend `len(df)`. Note that `size` is an attribute, and it returns the number of elements (which equals the count of rows for any Series). DataFrames also define a `size` attribute, which returns the same result as `df.shape[0] * df.shape[1]`. For a non-null row count, use `DataFrame.count` and `Series.count`.
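A minimal sketch of the row-count and element-count options mentioned above. The sample data and variable names are made up for illustration only.

```python
import pandas as pd

# Hypothetical sample data, used only for illustration.
df = pd.DataFrame({"a": [1, 2, 3], "b": [4.0, 5.0, 6.0]})
s = df["a"]

n_rows = len(df)                            # number of rows in the DataFrame
n_rows_series = len(s.index)                # same count, taken from the Series index
series_size = s.size                        # number of elements in the Series
frame_size = df.size                        # rows * columns for a DataFrame
shape_product = df.shape[0] * df.shape[1]   # should match df.size

print(n_rows, n_rows_series, series_size, frame_size, shape_product)
```

For a Series the three counts agree, while for a DataFrame `size` grows with the number of columns, which is why `len(df)` is the less ambiguous way to ask for rows.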

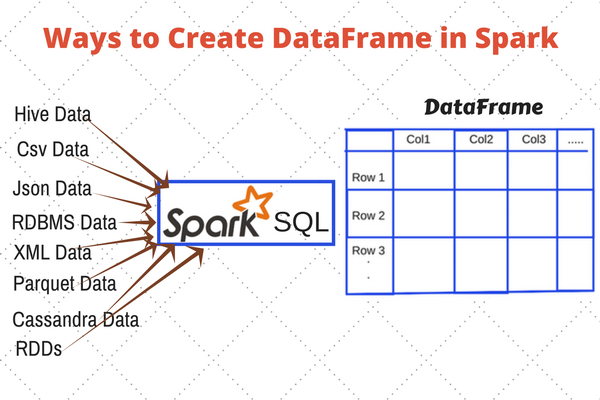

So you can estimate its size just by multiplying the size of the dtype it contains by the dimensions of the array. For example, if you have 1000 rows with 2 `np.int32` and 5 `np.float64` columns, your DataFrame will have one 2x1000 `np.int32` array and one 5x1000 `np.float64` array, which is 4 bytes * 2 * 1000 + 8 bytes * 5 * 1000 = 48,000 bytes.

Officially, you can use Spark's `SizeEstimator` to get the size of a DataFrame, but it seems to provide inaccurate results, as discussed in other SO topics. You can use RepartiPy instead to get the accurate size of your DataFrame.
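The pandas arithmetic above can be checked with a short sketch. The column names are hypothetical, and `memory_usage(index=False)` is used so the index is left out of the comparison.

```python
import numpy as np
import pandas as pd

n_rows = 1000

# Hypothetical column names: 2 int32 columns and 5 float64 columns, as in the example.
data = {f"i{k}": np.zeros(n_rows, dtype=np.int32) for k in range(2)}
data.update({f"f{k}": np.zeros(n_rows, dtype=np.float64) for k in range(5)})
df = pd.DataFrame(data)

# Manual estimate: bytes per element of each dtype times the number of cells of that dtype.
estimate = (np.dtype(np.int32).itemsize * 2 * n_rows
            + np.dtype(np.float64).itemsize * 5 * n_rows)

# pandas' own per-column accounting, excluding the index.
measured = int(df.memory_usage(index=False).sum())

print(estimate, measured)
```

This only holds for fixed-width numeric dtypes. Columns of Python objects such as strings need `memory_usage(deep=True)` to account for the objects themselves.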


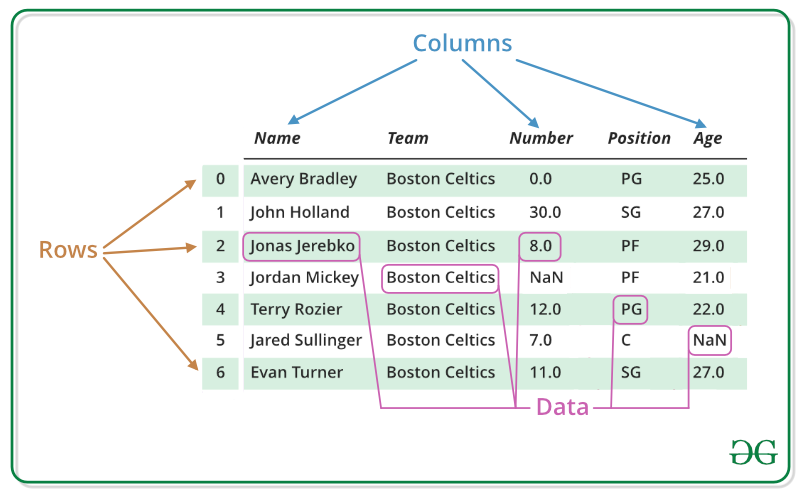

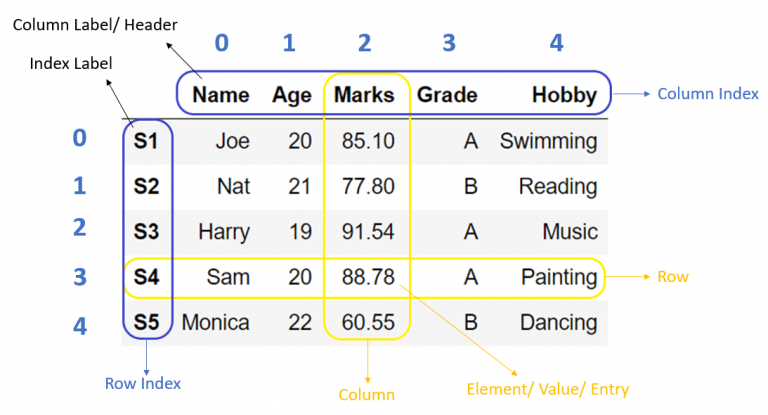

The `size` attribute gives the number of cells allocated to the DataFrame, whereas `count` gives the number of elements that are actually present in it. For example, a DataFrame with 3 rows and 2 columns has a size of 6, even though it has only 3 rows. This answer covers the difference between `size` and `count` with respect to a DataFrame, not a pandas Series.
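A small sketch of the difference, assuming a made-up 3-row, 2-column frame with some missing values:

```python
import numpy as np
import pandas as pd

# Hypothetical data: 3 rows, 2 columns, with three missing values.
df = pd.DataFrame({"x": [1.0, np.nan, 3.0], "y": [np.nan, np.nan, 6.0]})

total_cells = df.size                    # 3 rows * 2 columns, missing values included
non_null_per_column = df.count()         # Series of non-null counts, one entry per column
non_null_total = int(non_null_per_column.sum())

print(total_cells)
print(non_null_per_column)
print(non_null_total)
```

`size` ignores whether a cell holds a value, while `count` skips missing entries and reports per column by default.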

One way to make a pandas DataFrame of the size you wish is to provide index and column values when creating it: `df = pd.DataFrame(index=range(numRows), columns=range(numCols))`. This creates a DataFrame full of NaNs where all columns are of data type `object`.

I am trying to find out the size/shape of a DataFrame in PySpark. I do not see a single function that can do this. In Python I can do `data.shape`. Is there a similar function in PySpark?
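The pandas construction described above can be sketched as follows. The dimensions are arbitrary values chosen for illustration.

```python
import pandas as pd

# Arbitrary dimensions chosen for illustration.
num_rows, num_cols = 4, 3

# Passing only index and columns yields a frame with no data, so every cell is NaN.
df = pd.DataFrame(index=range(num_rows), columns=range(num_cols))

all_missing = bool(df.isna().all().all())

print(df.shape)
print(all_missing)
print(df.dtypes)   # inspect the resulting column dtypes
```

If numeric columns are wanted from the start, passing an explicit `dtype` or filling from a NumPy array is usually a better fit than an empty frame.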


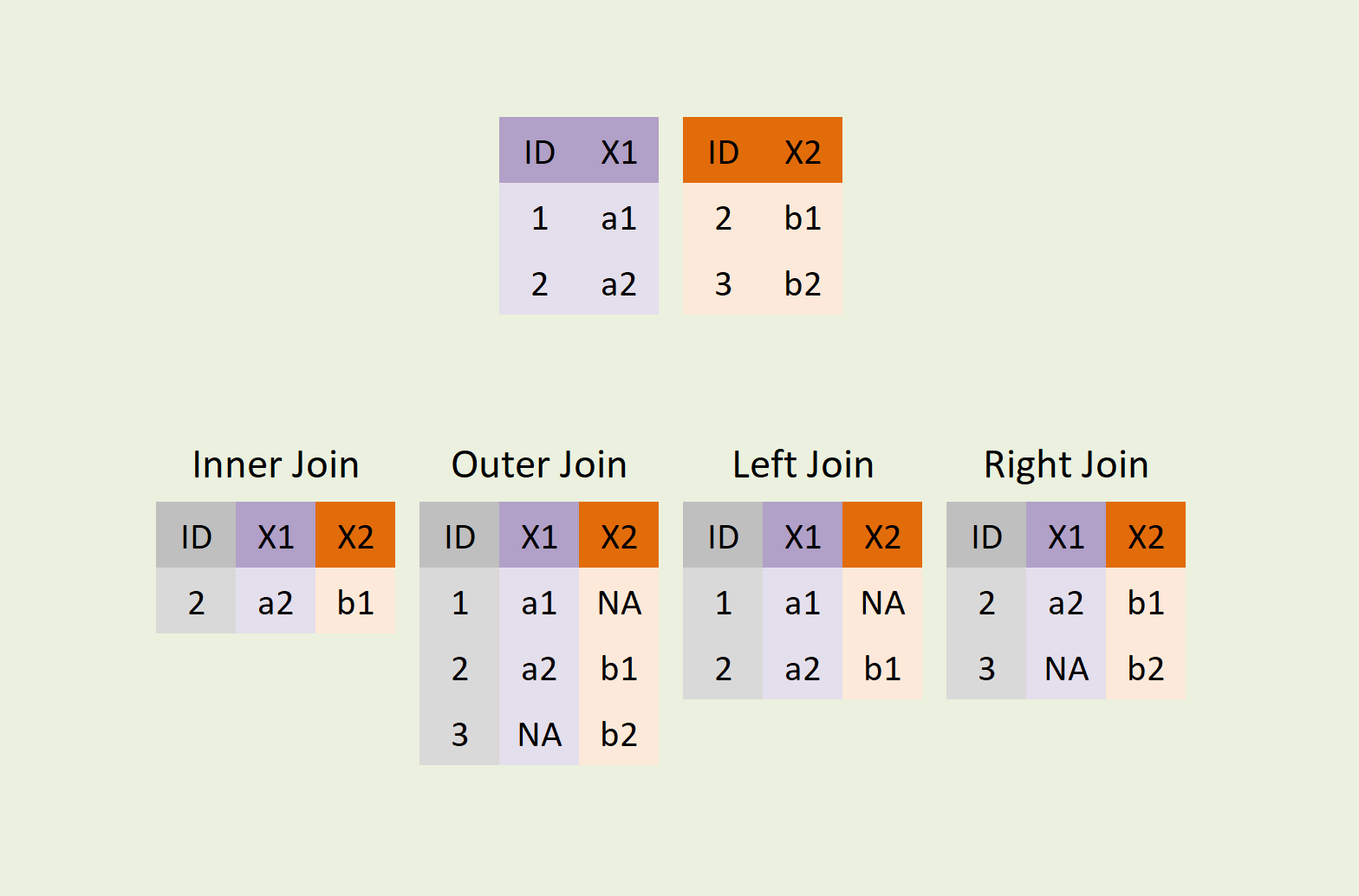


Unfortunately for me, I don't see this taking any effect with pandas 0.23.0: after applying this style to my df and the target column in it, the target column's size remains the same rather than being the value provided for width. It's probably worth emphasizing this.

You can use RepartiPy to get the accurate size of your DataFrame. After `import repartipy`, use `repartipy.SizeEstimator` if you have enough executor memory to cache the whole DataFrame. If you do NOT have enough memory (i.e. the DataFrame is too large), use `repartipy.SamplingSizeEstimator` instead. The estimator is used as a context manager: `with repartipy.SizeEstimator(spark=spark, df=df) as ...`


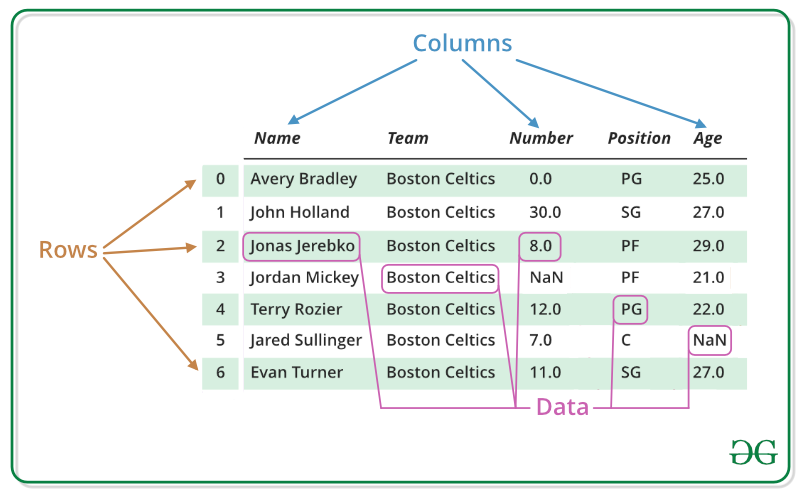


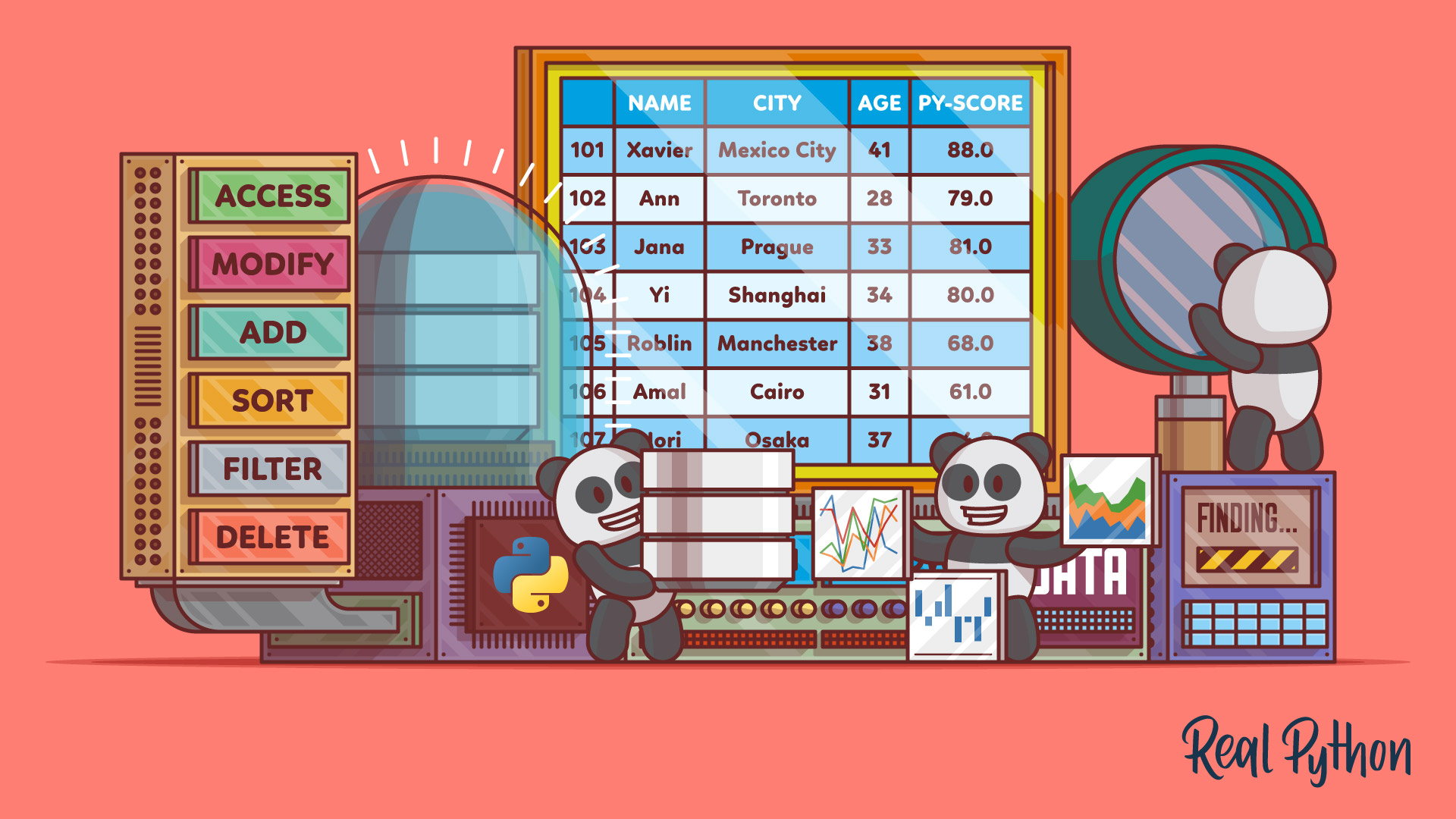




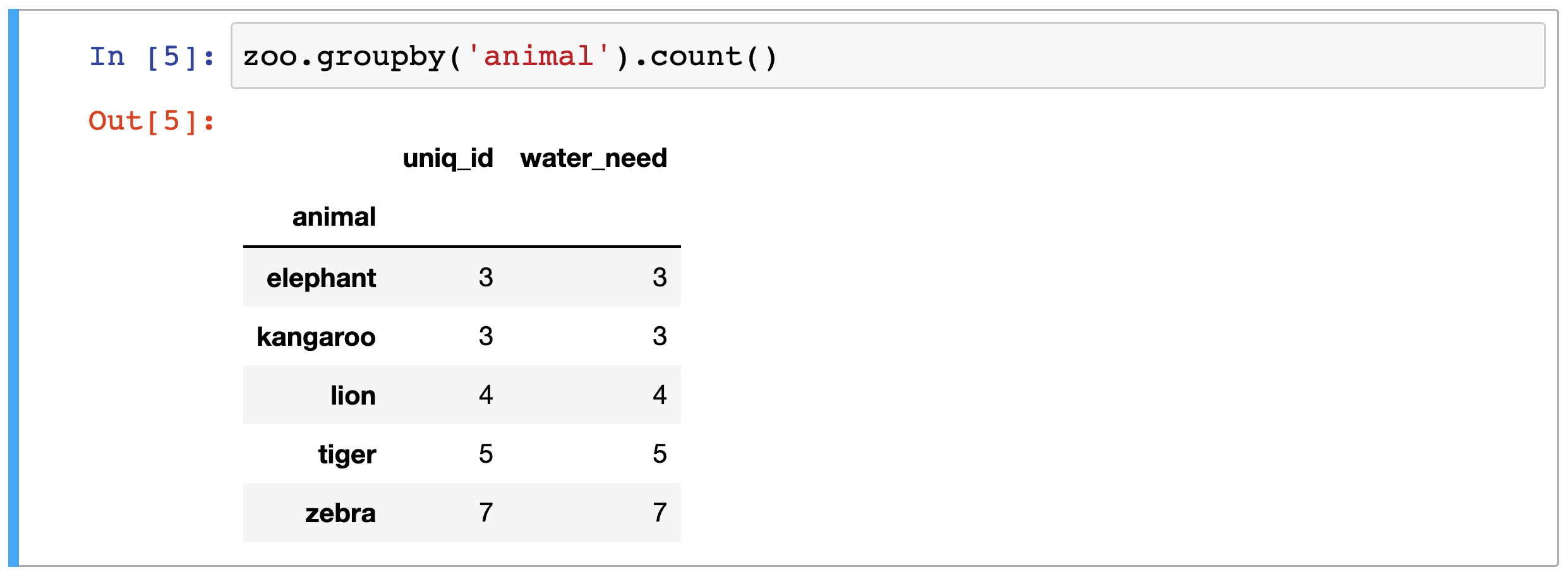

