F1 Score Formula

Since the product of three numbers is less than or equal to zero whenever one of them is less than or equal to zero and the other two are non-negative, we can see that the left-hand side of the equation is …

I was confused about the differences between the F1 score, the Dice score and IoU (intersection over union). By now I found out that F1 and Dice mean the same thing (right?) and IoU has a very …

Unbalanced classes, but one class is more important than the other. For example, in fraud detection it is more important to correctly label an instance as fraudulent, as opposed to …
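On the F1 vs Dice vs IoU question: for a single binary label, F1 and the Dice coefficient are the same quantity, and IoU (the Jaccard index) is a monotone function of it. With TP, FP and FN as the true-positive, false-positive and false-negative counts, $F_1 = \frac{2\,TP}{2\,TP + FP + FN}$ and $\text{IoU} = \frac{TP}{TP + FP + FN}$, which gives $F_1 = \frac{2\,\text{IoU}}{1 + \text{IoU}}$. The sketch below checks that identity on hypothetical counts using exact fractions; the helper names are illustrative, not from any library.

```python
from fractions import Fraction


def f1_from_counts(tp: int, fp: int, fn: int) -> Fraction:
    """F1 (identical to the Dice coefficient) from TP, FP and FN counts."""
    return Fraction(2 * tp, 2 * tp + fp + fn)


def iou_from_counts(tp: int, fp: int, fn: int) -> Fraction:
    """IoU (Jaccard index) from the same counts."""
    return Fraction(tp, tp + fp + fn)


def f1_from_iou(iou: Fraction) -> Fraction:
    """Convert IoU to F1/Dice via F1 = 2 * IoU / (1 + IoU)."""
    return 2 * iou / (1 + iou)


# Hypothetical counts, e.g. pixels of a segmentation mask.
tp, fp, fn = 30, 10, 20
f1 = f1_from_counts(tp, fp, fn)
iou = iou_from_counts(tp, fp, fn)
print(f"F1/Dice = {float(f1):.4f}, IoU = {float(iou):.4f}")  # F1/Dice = 0.6667, IoU = 0.5000

# With exact fractions the identity holds with no rounding error.
assert f1 == f1_from_iou(iou)
```

Because the mapping is monotone, F1 and IoU rank predictions identically when each is computed on the same set of counts, and IoU is never larger than F1. They can disagree once each is averaged over many images or classes, since the average of a nonlinear transform is not the transform of the average.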

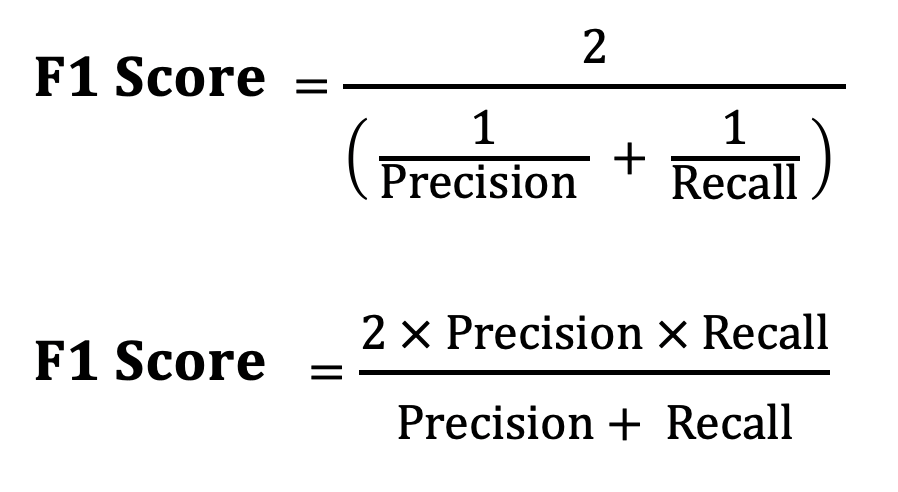

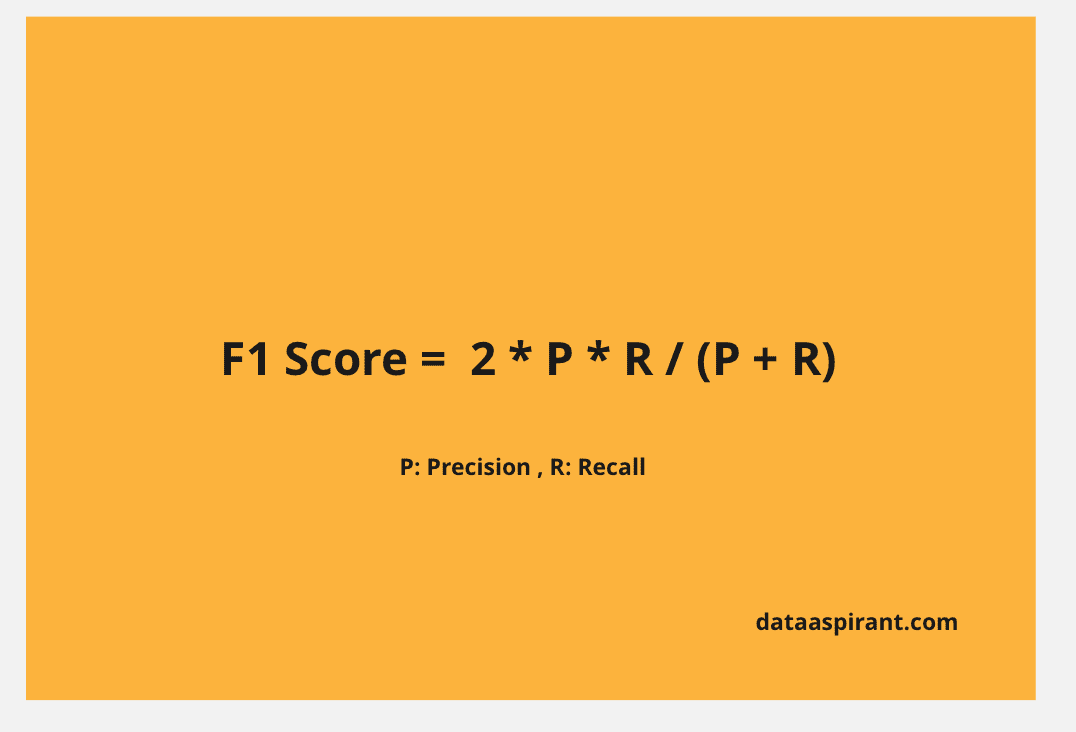

![F1 Score Formula](https://i.ytimg.com/vi/JYQupddZkzc/maxresdefault.jpg)

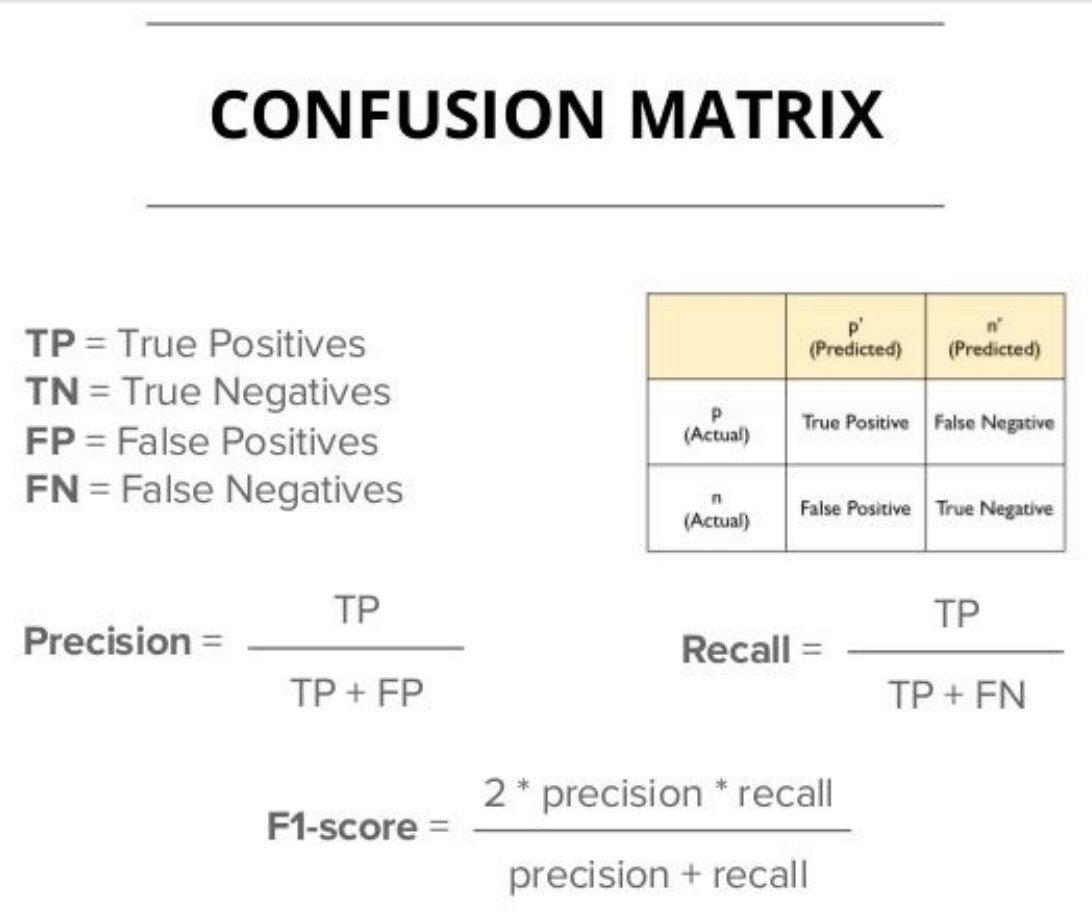

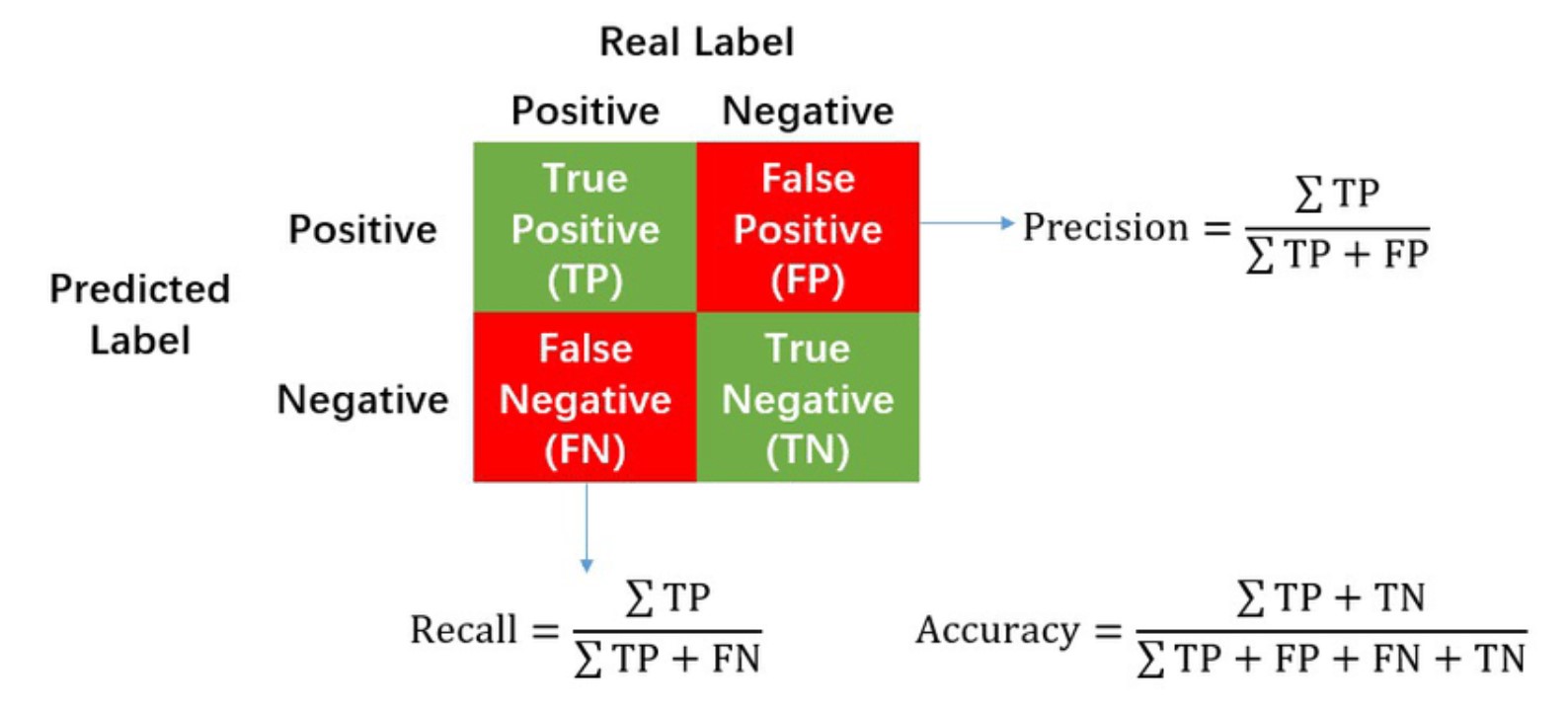

![Confusion Matrix, Precision and Recall Explained (KDnuggets)](https://www.researchgate.net/publication/367393140/figure/fig4/AS:11431281114710300@1674648981676/Confusion-matrix-Precision-Recall-Accuracy-and-F1-score.jpg)

![Confusion Matrix for Your Multi-Class Machine Learning](https://miro.medium.com/v2/resize:fit:898/1*7tC4-fUHtcffvXGcGTJJtg.png)

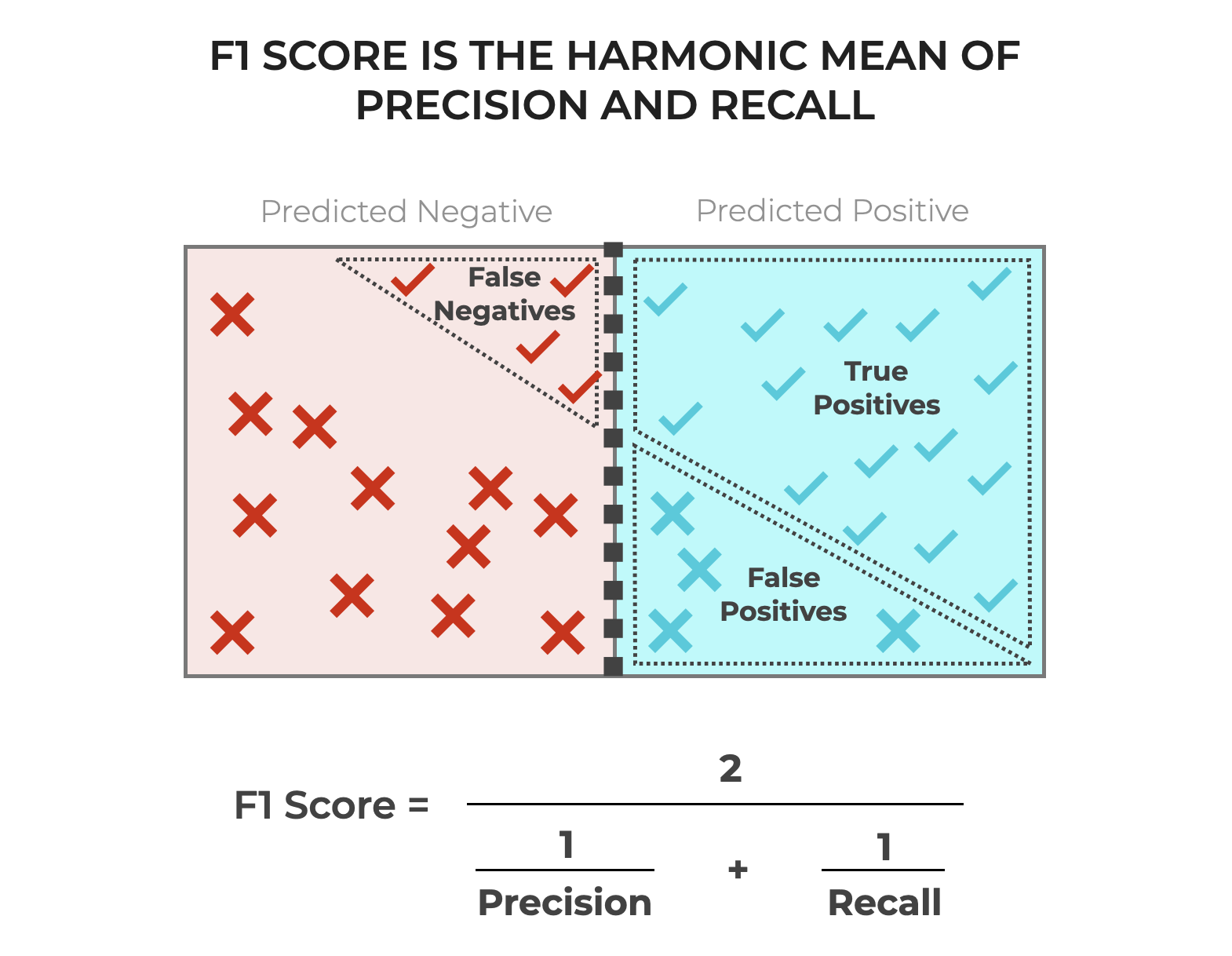

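The following formula calculates the F1 score; in other words, it is the harmonic mean of precision and recall:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

$$F_{1,\text{custom}} = 0.1 \cdot F_{1,\text{class }0} + 0.45 \cdot F_{1,\text{class }1} + 0.1 \cdot F_{1,\text{class }2} + 0.35 \cdot F_{1,\text{class }3}$$

Regarding this, I'd expect you to give more weight to the F1 score of class 3. Am I missing …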
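A minimal sketch of the formula in Python, assuming precision and recall are already computed. It also shows the F-beta generalization, $F_\beta = \frac{(1 + \beta^2)\,P\,R}{\beta^2 P + R}$, which reduces to F1 at $\beta = 1$; $\beta > 1$ leans toward recall, which can suit cases such as fraud detection where missing a positive is costly. The per-class weighting reuses the weights 0.1, 0.45, 0.1 and 0.35 quoted above; the precision/recall values and per-class F1 scores are made up.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta score; beta = 1 gives the usual F1 (harmonic mean of P and R)."""
    if precision == 0 and recall == 0:
        return 0.0  # convention: treat the undefined 0/0 case as 0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def weighted_f1(per_class_f1: list[float], weights: list[float]) -> float:
    """Combine per-class F1 scores with user-chosen weights that sum to 1."""
    if len(per_class_f1) != len(weights):
        raise ValueError("need exactly one weight per class")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    return sum(w * f for w, f in zip(weights, per_class_f1))


p, r = 0.8, 0.5  # hypothetical precision and recall
print("F1  :", f_beta(p, r))
print("F2  :", f_beta(p, r, beta=2))    # leans toward recall
print("F0.5:", f_beta(p, r, beta=0.5))  # leans toward precision

# Weights from the quoted example, for classes 0..3.
weights = [0.1, 0.45, 0.1, 0.35]
per_class = [0.90, 0.60, 0.75, 0.40]  # hypothetical per-class F1 scores
print("custom weighted F1:", weighted_f1(per_class, weights))
```

With these weights class 1 (0.45) counts for more than class 3 (0.35), which is the point the quoted comment raises: if class 3 matters most, its weight should be the largest.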

The scikit-learn package in Python has two metrics, `f1_score` and `fbeta_score`. Each of these has a weighted option, where the class-wise F1 scores are multiplied by the …

How can I calculate precision and recall so that it becomes easy to calculate the F1 score? The normal confusion matrix is 2 × 2. However, when it becomes 3 × 3, I don't know …
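For a multi-class problem you compute precision and recall one class at a time, treating that class as positive and all others as negative. Assuming rows of the confusion matrix are true classes and columns are predicted classes (the convention of scikit-learn's `confusion_matrix`), class k's precision is the diagonal entry divided by its column sum and its recall is the diagonal entry divided by its row sum. The sketch below does this in plain Python on a made-up 3 × 3 matrix, then averages the per-class F1 scores two ways: an unweighted (macro) mean, and a mean weighted by each class's support (its row sum), which is how scikit-learn's `average='weighted'` option combines them.

```python
def per_class_metrics(cm: list[list[int]]) -> list[tuple[float, float, float]]:
    """Return (precision, recall, f1) for each class of a square confusion matrix.

    Assumes cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum = TP + FP
        actual_k = sum(cm[k])                           # row sum = TP + FN (support)
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def averaged_f1(cm: list[list[int]]) -> tuple[float, float]:
    """Macro (unweighted) and support-weighted averages of the per-class F1 scores."""
    f1s = [f1 for _, _, f1 in per_class_metrics(cm)]
    supports = [sum(row) for row in cm]
    macro = sum(f1s) / len(f1s)
    weighted = sum(f * s for f, s in zip(f1s, supports)) / sum(supports)
    return macro, weighted


# Hypothetical 3 x 3 confusion matrix (rows: true class, columns: predicted class).
cm = [
    [50, 3, 2],
    [4, 30, 6],
    [1, 5, 9],
]
for k, (p, r, f) in enumerate(per_class_metrics(cm)):
    print(f"class {k}: precision={p:.3f} recall={r:.3f} f1={f:.3f}")
macro, weighted = averaged_f1(cm)
print(f"macro F1={macro:.3f}  weighted F1={weighted:.3f}")
```

If scikit-learn is installed, `f1_score(y_true, y_pred, average='macro')` and `average='weighted'` on the underlying labels should agree with these numbers; the plain-Python version only makes the row/column bookkeeping explicit.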

![Day 59 of 60daysOfMachineLearning: Precision, Recall, F1](https://pbs.twimg.com/media/FlIBdJYWAAAPd1R.jpg)

![F1 Score Definition (Encord)](https://images.prismic.io/encord/0ef9c82f-2857-446e-918d-5f654b9d9133_Screenshot+(49).png?auto=compress,format)

![F1 Score in Machine Learning: Intro & Calculation](https://cdn.prod.website-files.com/5d7b77b063a9066d83e1209c/63b413d5d222e7befdc535e8_639c3b7247e46b63fc2aa60b_HERO%2520Orange.jpeg)

I would advise you to calculate the F-score, precision and recall for the case in which your classifier predicts all negatives, and then with the actual algorithm. If it is a skewed set, you might want …

Under such a situation, the F1 score could be a better metric. The F1 score is also a common choice for information-retrieval problems and popular in industry settings. Here is an …
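A minimal sketch of that advice in plain Python, on a hypothetical skewed dataset with 5 positives among 100 samples. The all-negative baseline scores 95% accuracy but zero recall and, under the 0/0 → 0 convention used here, zero precision and F1. The hypothetical classifier has lower accuracy (93%) yet a much higher F1 (about 0.53), which is why F1 is often preferred on skewed data.

```python
def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = len(y_true) - tp - fp - fn
    # No positive predictions (or no positives) -> metric set to 0 by convention.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical skewed dataset: 5 positives, 95 negatives.
y_true = [1] * 5 + [0] * 95

# Baseline that always predicts the majority (negative) class.
all_negative = [0] * 100

# Hypothetical classifier: finds 4 of the 5 positives and raises 6 false alarms.
model = [1, 1, 1, 1, 0] + [1] * 6 + [0] * 89

for name, pred in [("all negative", all_negative), ("model", model)]:
    print(name, binary_metrics(y_true, pred))
```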

![Confusion Matrix: Accuracy, Precision, Recall, F1 Score](https://www.researchgate.net/publication/355985914/figure/fig2/AS:1087884408958976@1636383263110/Confusion-matrix-The-accuracy-precision-recall-F1-score-and-AUC-mainly-rely-on-the.png)

![Main Score](https://i.ytimg.com/vi/ji48Lz6amMc/maxresdefault.jpg)
