How Does LoRA Work in AI?

Low-rank adaptation (LoRA) is a technique used to adapt machine learning models to new contexts. It can adapt large models to specific uses by adding lightweight pieces to the original model.

LoRAs, which one source glosses as "Learnable, Reversible, and Adjustable" operations (the name itself stands for Low-Rank Adaptation), are among the most exciting implementations of this groundbreaking technology. Topics covered include the significance of LoRA for fine-tuning, what catastrophic forgetting is and how LoRA handles it, whether LoRA can be used for any ML model, what QLoRA is, and code for fine-tuning.


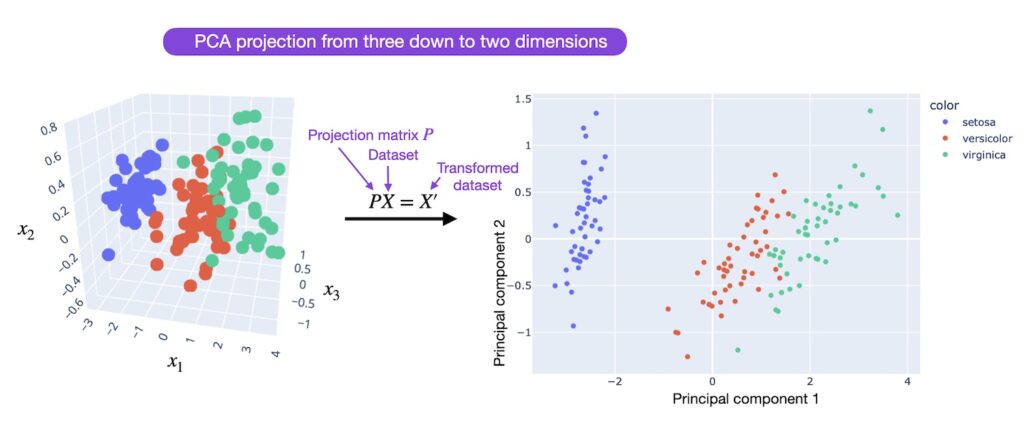

Low-rank adaptation (LoRA) is a faster, cheaper way of turning LLMs and other foundation models into specialists, and IBM Research is among the groups innovating with LoRAs. This article explains how LoRA works in plain English. You will gain an understanding of how it compares to full-parameter fine-tuning and what is going on behind the scenes.
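To make the comparison with full-parameter fine-tuning concrete, here is a rough sketch of the trainable-parameter arithmetic for a single weight matrix. The layer size (4096 x 4096) and the rank (8) are illustrative assumptions, not figures from this article, and the function names are hypothetical.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a rank-`rank` update B @ A,
    where B is d_out x rank and A is rank x d_in."""
    return rank * (d_out + d_in)


# Assumed, illustrative sizes (not taken from the article).
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)  # 16,777,216
lora = lora_params(d_out, d_in, rank)     # 65,536
ratio = lora / full                       # 0.00390625, i.e. about 0.4%

print(f"full: {full:,}  lora: {lora:,}  ratio: {ratio:.4%}")
```

Under these assumptions the low-rank update trains well under 1% of the parameters that full fine-tuning would touch for the same layer, which is where the "faster, cheaper" claim comes from.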

We'll also look at why LoRA is considered a game changer and how it can transform the way we approach AI adaptation, covering its mechanics, benefits, and applications.


Low-rank adaptation (LoRA) is a method for retraining an existing large language model (LLM) for high performance at specific tasks while reducing the number of parameters that must be trained. LoRA is inspired by 2020 research from Meta titled "Intrinsic Dimensionality Explains the Effectiveness of Language Model Fine-Tuning."
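In symbols, the low-rank idea is commonly written as below. The notation follows the usual presentation of LoRA rather than anything defined in this article: W_0 is the frozen pretrained weight matrix, B and A are the small trainable matrices, r is the rank, and α is a scaling hyperparameter.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)
```

Because r is much smaller than d and k, the product BA has far fewer free parameters than a full d × k update.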

LoRA is a technique for fine-tuning large models. At a high level, it keeps the original model unchanged and adds small, trainable parts to the layers being adapted. In Stable Diffusion, LoRA (short for Low-Rank Adaptation) improves image creation by tailoring particular aspects, such as characters.
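The "keep the original unchanged, add small parts" idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production implementation: the class name `LoRALinear`, the helper `matvec`, the tiny matrix sizes, and the initialization choices (small random A, zero B) are hypothetical, and real code would use a tensor library.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """Hypothetical linear layer: frozen weights W plus a low-rank update B @ A."""

    def __init__(self, W, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # original weights: this class never modifies them
        # A starts small and random, B starts at zero, so the update is zero at first.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.W, x)                    # output of the frozen model
        update = matvec(self.B, matvec(self.A, x))  # low-rank correction B @ (A @ x)
        return [b + self.scale * u for b, u in zip(base, update)]


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
x = [1.0, 2.0, 3.0]

layer = LoRALinear(W, rank=2)
before = layer.forward(x)  # equals matvec(W, x) because B is all zeros

layer.B[0][0] = 0.5        # stand-in for a training step that changes only B
after = layer.forward(x)   # first output shifts; W itself is untouched
```

Only `A` and `B` would be trained in this sketch; discarding them restores the original model's behavior exactly.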


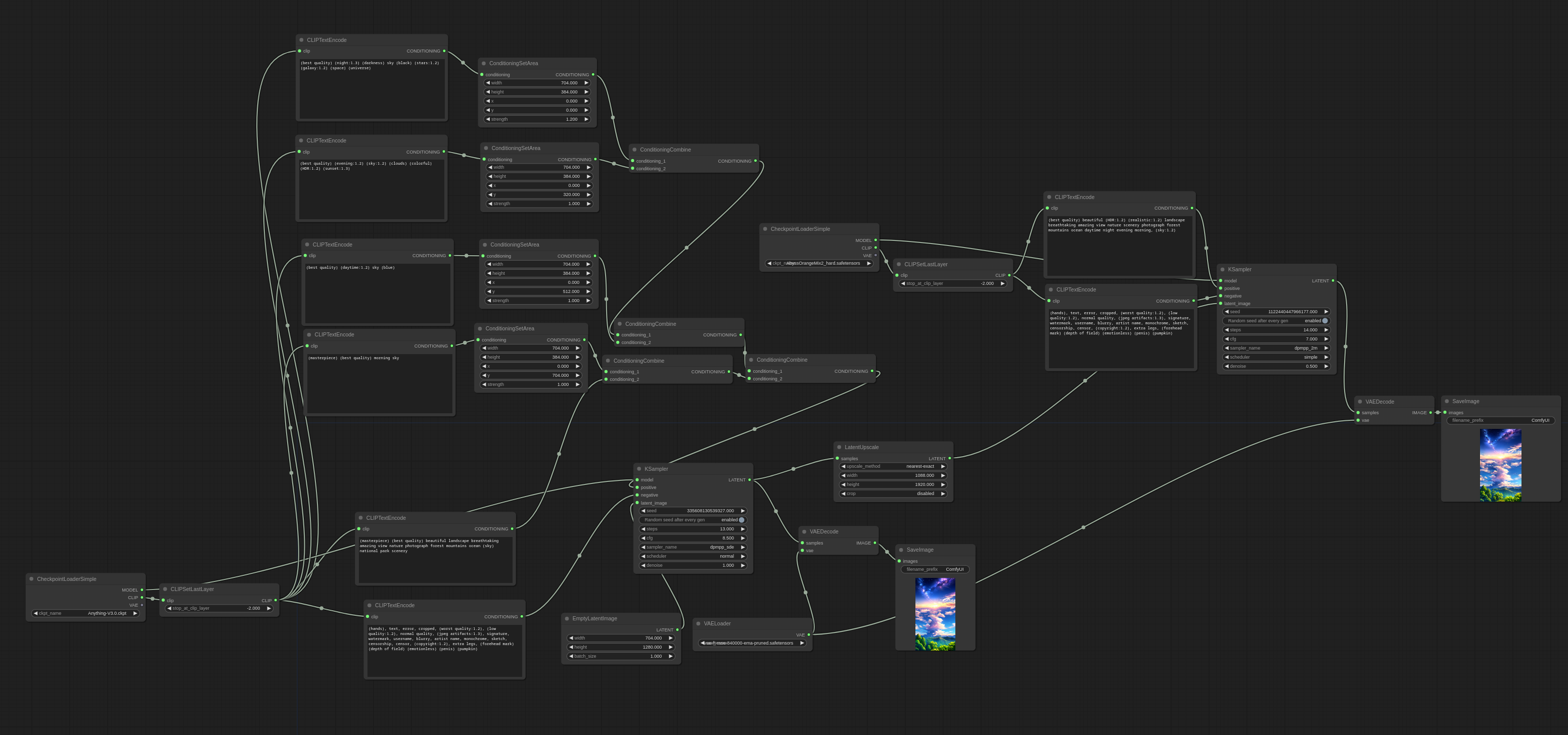

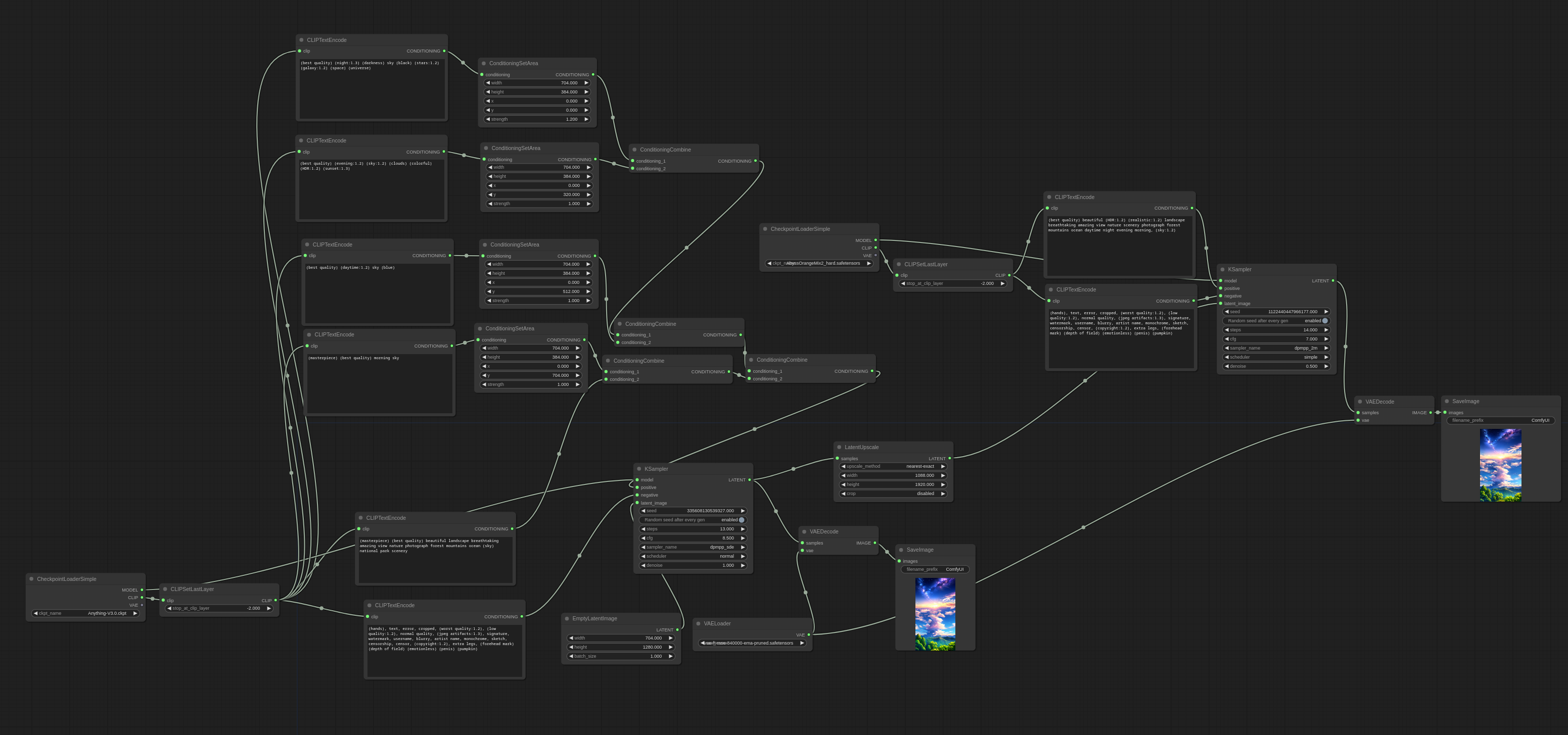
